Changes in technology have increased our capacity to interface with existing tools and measures in social sciences and healthcare research. Demands for increased accessibility and a preference for flexibility in data collection have resulted in an influx of technological innovations and adaptations of existing tools. Yet, the usability of digital adaptations of self-reported measures has not been well studied. This research investigated the comparability and reliability of the empirically validated Multi-System Model of Resilience Inventory through two modalities: an online survey platform and a digital mobile application. User experiences of the mobile application in research were also studied. Implications of the findings for the use of digital applications in research in healthcare and social sciences are discussed.

The changing landscape of technological innovations has shifted our everyday interactions. Digital technologies offer new opportunities to engage and create solutions to address research challenges. For example, advancements have changed access to different populations, reduced language and physical barriers, and increased the accessibility of research participants (Aguilera & Muench, 2012).

Research in the social sciences has gradually shifted from experimental studies with small samples collected in person to big data collected over digital mediums. Marszalek et al. (2011) conducted a systematic review of the literature and found demands for increased sample sizes in psychological research. Given the practical limitations of in-person experimental studies, researchers have adopted novel approaches, such as digital psychotherapies offered via mobile phones and smartphones (e.g., Aguilera & Muench, 2012), web-based applications to engage participants (e.g., Parks, Williams, Tugade, Hokes, Honomichl, & Zilca, 2018), and an increased reliance on self-report measures to facilitate the measurement of constructs and collection of data in these online settings (Sassenberg & Ditrich, 2019).

Demand and Benefits of Digitization of Research Tools

Although traditional pen-and-paper batteries of psychological measures are still utilized in data collection today, digital innovations have increased the availability of technological integration in research, particularly in the ways in which we collect data. Popular survey-hosting sites, such as SurveyMonkey®, QualtricsXM, and other platforms, have created an alternative to pen-and-paper surveys without significant changes to the format and appearance of traditional questionnaires. Indeed, the use of online survey platforms offers great benefits for researchers, including more efficient and more expansive data collection, such as remote and off-site data collection, directly exporting participant responses, and reducing the need for paper-and

Digital and online administration through survey platforms also has advantages for individual participants and users. It provides increased opportunities for individuals to participate in research studies without having to attend sessions in person, creates perceived anonymity associated with their participation, and makes research studies easier and more convenient to access. Research evidence also supports the positive outcomes of online data collection: studies comparing the psychometric properties of online versus pen-and-paper surveys have found no significant differences in the quality of the outputs of the measures studied, while noting the relative convenience of use for both researchers and users (Joubert et al., 2009; Ward et al., 2014).

Beyond online survey platforms, another avenue for technological integration in research is the use of mobile applications. Mobile solutions have experienced rapid growth paralleling the expanding needs of healthcare research (Aguilera & Muench, 2012). In particular, the burgeoning mobile application industry may assist in filling gaps in the community (e.g., access to health information) and gaps in health and social sciences research (e.g., access to diverse populations; Gan & Goh, 2016). Similar to online survey platforms, smartphone-based self-report data collection can be implemented across wide geographical regions, may be cost-effective, and allows sampling from large population groups (Gan & Goh, 2016). Yet, mobile applications may also provide unique benefits beyond the scope of survey platforms for both researchers and individual users or participants.

For researchers, mobile applications provide a more attractive option to engage and interact with participants, create a virtual environment to encapsulate the research experience, and allow researchers to exert greater experimental control in the degree of knowledge shared during the user experience. For participating individuals, the benefits extend to a more accessible, user-friendly, engaging experience, and an opportunity to learn more information that may be seen as personalized and even gamified, adding to incentives and increased buy-in at the user-level. Indeed, Aguilera and Muench (2012) found that over 75% of individuals surveyed expressed interest in using mobile phones for information and

Challenges for Mobile Applications in Research

The increased utilization of digital technologies to conduct research also creates novel challenges and considerations, particularly in relation to the use of mobile applications. A previous review of the literature found that while digital applications are evolving in response to the need for psychological testing, most available applications did not utilize validated psychological measures (Gan & Goh, 2016). For example, when searching for specific tasks, such as the Tower of Hanoi or Stroop, many apps claim to have adapted them, but little evidence exists to support these claims. Furthermore, researchers have cautioned against the use of validated instruments in formats other than the one used during validation, given the potential effects of these alternative mediums on the stability and psychometric properties of the tool (McCoy et al., 2004). Indeed, few studies have compared delivery modalities (Noyes & Garland, 2008). In addition, many of these adaptations are gamified, emphasizing user entertainment rather than the reliability and validity of the tools themselves (Gan & Goh, 2016). The lack of empirical evidence on comparability and reliability is especially problematic because individual users may be misinformed regarding the interpretation of outcomes. Thus, it remains unclear whether results from digital applications are truly comparable to those from pen-and-paper and/or online versions of the same tools.

Rationale and Objectives

The goal of the current study is to explore the comparability between the mobile application of a self-report tool and its online survey counterpart. In particular, this paper will compare the reliability of scores from a standard inventory with the digital app-based scores of the same resilience inventory in samples of university students, and examine their attitudes towards the use of mobile applications. The mobile adaptation of a resilience measurement was selected due to its appeal and popularity in the general population. The term and concept of resiliency have captured the attention of popular media. While some ambiguities exist in its operationalization and use in research (Liu, Reed, & Girard, 2017), the notion of resiliency remains a particularly important construct for the general population as it conveys powerful narratives in relation to wellbeing despite challenges and adversity. Indeed, resiliency is often marketed as a goal of many mental health-related digital applications available on the market today (Olu et al., 2019).

Participants

Participants were university students enrolled in introductory psychology courses, drawn from diverse academic disciplines, study programmes, ages, and backgrounds. Students had the option to participate in psychological testing for course credit as part of the experiential learning offered at the university. The study obtained ethics approval from Ryerson University in Toronto, Canada (REB 2017-230).

Participants included 356 students (n = 270 females; n = 86 males) who completed the standard version of the questionnaire via an online survey platform. In addition, 110 participants (n = 94 females; n = 16 males) completed the mobile version of the questionnaire through a mobile application. Participants ranged in age from 16 to 54 years, with a mean age of 21, and the two samples did not differ significantly in age or gender distribution.

Measures and Modalities

Study participants completed a validated self-reported inventory on resilience, the Multi-System Model of Resilience Inventory (MSMR-I), either as a standard tool delivered through an online survey platform (Qualtrics XM) to retain its appearance, or as a digital mobile application for smartphones and tablets (MSMR / Multi-System Resilience © 2019). The MSMR-I is a 27-item tool that conceptualizes resilience as an evolving capacity sourced from three systems: internal resilience, or health and health-related behaviours that determine our resilience; external resilience, or sociocultural determinants of resilience; and coping & pursuits, or individual personality correlates and skills developed over time (Liu, Reed, & Girard, 2017; Multi-System Resilience, 2019). Questions were scored on a 4-point Likert-type scale, with 0 corresponding to “not at all like me” and 3 to “very much like me”. The internal consistency of the MSMR-I is Cronbach’s α = .90 to .91 overall, and .75 to .85 among the three systems within the MSMR (Multi-System Resilience, 2019).
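As an illustration of the scoring and internal-consistency figures described above, the following is a minimal sketch in Python rather than the authors' scoring procedure. It assumes the overall score is the simple sum of the 27 items coded 0 to 3 (giving a possible range of 0 to 81), which the source does not state explicitly; the function names `total_score` and `cronbach_alpha` are hypothetical.

```python
from statistics import pvariance


def total_score(responses):
    """Sum 27 item responses coded 0-3 (assumed scoring rule; range 0-81)."""
    if len(responses) != 27 or any(r not in (0, 1, 2, 3) for r in responses):
        raise ValueError("expected 27 responses, each coded 0, 1, 2, or 3")
    return sum(responses)


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a list of k item scores.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    """
    k = len(rows[0])
    if k < 2 or any(len(row) != k for row in rows):
        raise ValueError("every respondent needs the same k >= 2 items")
    # Variance of each item across respondents.
    item_variances = [pvariance([row[i] for row in rows]) for i in range(k)]
    # Variance of each respondent's summed score.
    total_variance = pvariance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)
```

Because the same variance estimator is used in the numerator and the denominator, the choice between population and sample variance does not change the resulting alpha.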

The online MSMR-I survey was loaded onto a white screen with black font. Rather than circling the statement that best represents the individual’s perspectives, participants clicked on the appropriate response. Upon completion, participants were thanked for their participation, and debriefed without receiving any further information.

In the mobile format, the MSMR-I was delivered through a mobile application (MSMR/Multi-System Resilience© 2019). Although the standard format contained an introductory text regarding resilience, the digital application divided the introduction into four screens. The first screen introduced the tool as empirically validated, the second screen defined resilience, the third screen explained the questionnaire and outcomes expected, while the fourth was a disclaimer of the limitations of its use. From there, participants were guided to a set of questions which were asked one at a time. Once all 27 items were answered, participants were given their overall resilience score and subscale scores, along with brief descriptions of what each score meant.

Procedure

Upon signing up for the study, participants who completed the digitized version of the MSMR-I were sent an online invitation with details about the study, instructions for downloading the digital application, and a survey to complete after using the application, in which they entered the scores they had obtained on the mobile application. After a week, participants were asked to access the digital application once more and enter the new scores obtained. In addition, participants were asked to provide feedback about the application, including questions regarding their attitudes associated with the use of the app and an open-ended question on their user experience.

Data Analyses

Quantitative data were examined using the Statistical Package for the Social Sciences (SPSS) Version 25. Descriptive statistics, independent-samples t-tests, and correlations were used to examine the reliability of the digital application data versus the standard questionnaire on overall scores and subscale scores of the MSMR-I. Qualitative analysis of the application feedback was conducted using NVivo software.

Comparability of Digital Mobile Application vs. Standard Online Survey

An independent-samples t-test was conducted to compare MSMR-I scores in the standard format to the digital application scores. No significant differences were detected on resilience scores of the MSMR-I between the standard format (M = 48.79, SD = 13.38) and the digital application (M = 47.25, SD = 16.36) for males, t(67) = 0.38, p = .70. Similarly, no significant differences were detected on resilience scores of the MSMR-I between the standard format (M = 45.87, SD = 14.06) and the digital application (M = 44.13, SD = 13.39) for females, t(350) = 1.04, p = .30.
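For readers who want to see how such a comparison is computed, the sketch below implements the pooled-variance (Student) form of the independent-samples t statistic in plain Python. It is illustrative only: the analyses reported here were run in SPSS, the source does not state whether equal variances were assumed, and the function name `independent_t` is hypothetical. The p value is omitted because it requires the t distribution, which is not in the standard library.

```python
from math import sqrt
from statistics import mean, variance


def independent_t(group_a, group_b):
    """Return (t, df) for a pooled-variance independent-samples t-test."""
    n_a, n_b = len(group_a), len(group_b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each group needs at least two scores")
    df = n_a + n_b - 2
    # Pooled variance: sample variances weighted by their degrees of freedom.
    pooled = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / df
    # Standard error of the difference between the two group means.
    standard_error = sqrt(pooled * (1 / n_a + 1 / n_b))
    t = (mean(group_a) - mean(group_b)) / standard_error
    return t, df
```

In use, `group_a` would hold the overall MSMR-I scores from one modality and `group_b` the scores from the other, with the test run separately for each gender as in the analysis above.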

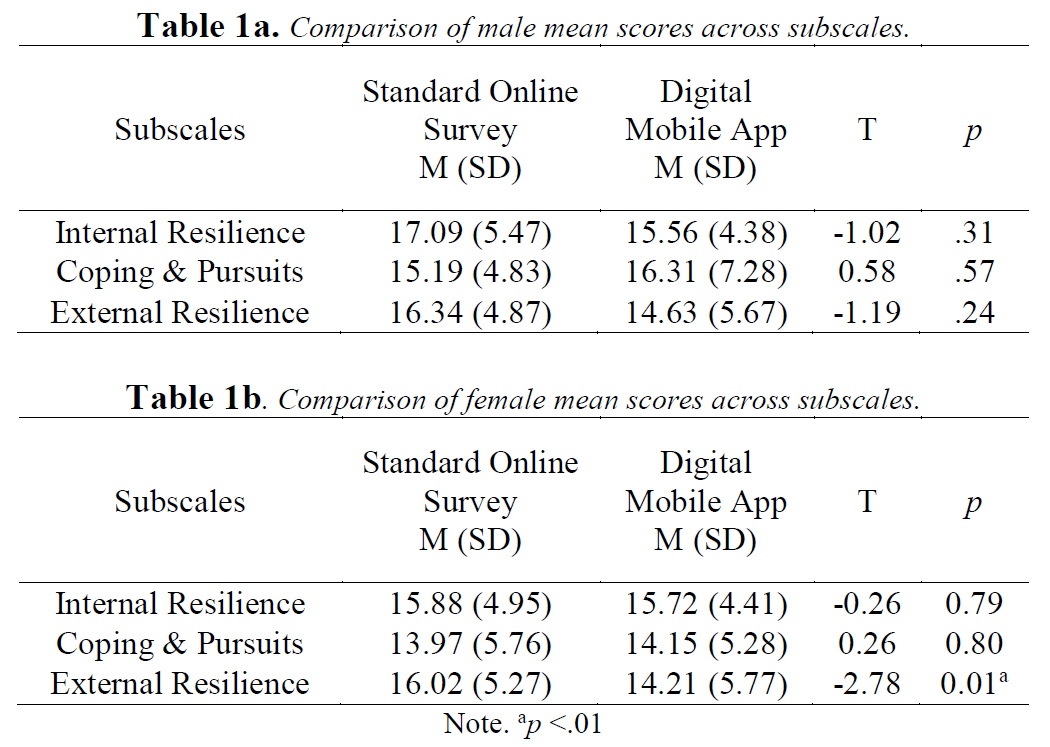

Subscale scores were also compared between the standard and mobile modalities, separately for males and females. Subscales were comparable, with no significant differences between modalities for either gender, except that for external resilience, females using the standard modality scored significantly higher than those using the mobile app. Results are shown in Tables 1a (males) and 1b (females).

Test-retest Reliability

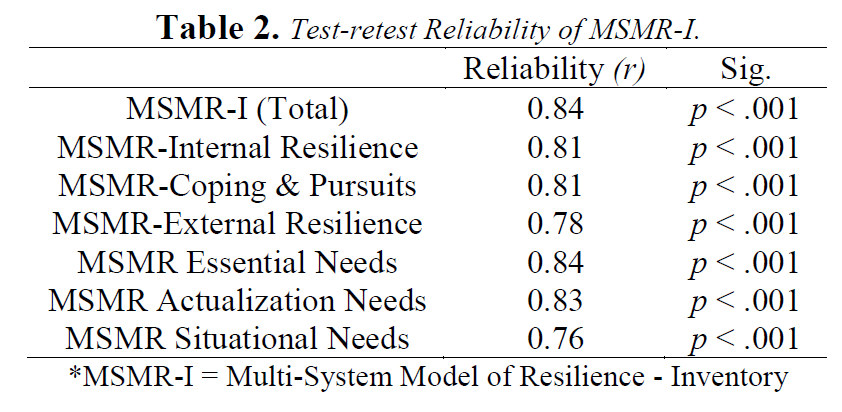

To gauge whether the MSMR-I app can be reliably used over time, we examined the reliability over a two-week period using Pearson’s correlations. Results for the overall scale and subscales are presented in Table 2 and indicate good reliability over this period.
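The test-retest coefficient referred to above is the Pearson correlation between each participant's first and second scores. A minimal sketch in plain Python follows; it is not the SPSS procedure used in the study, and the function name `pearson_r` is hypothetical.

```python
from math import sqrt


def pearson_r(first, second):
    """Pearson correlation between paired scores from two administrations."""
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two equal-length lists with at least two pairs")
    mean_first = sum(first) / len(first)
    mean_second = sum(second) / len(second)
    # Sum of cross-products and sums of squares of deviations from the means.
    cross = sum((a - mean_first) * (b - mean_second) for a, b in zip(first, second))
    ss_first = sum((a - mean_first) ** 2 for a in first)
    ss_second = sum((b - mean_second) ** 2 for b in second)
    if ss_first == 0 or ss_second == 0:
        raise ValueError("correlation is undefined when one list is constant")
    return cross / sqrt(ss_first * ss_second)
```

The same function can be applied to the overall score and to each subscale score in turn, pairing each participant's two administrations by position in the lists.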

Application Utilization

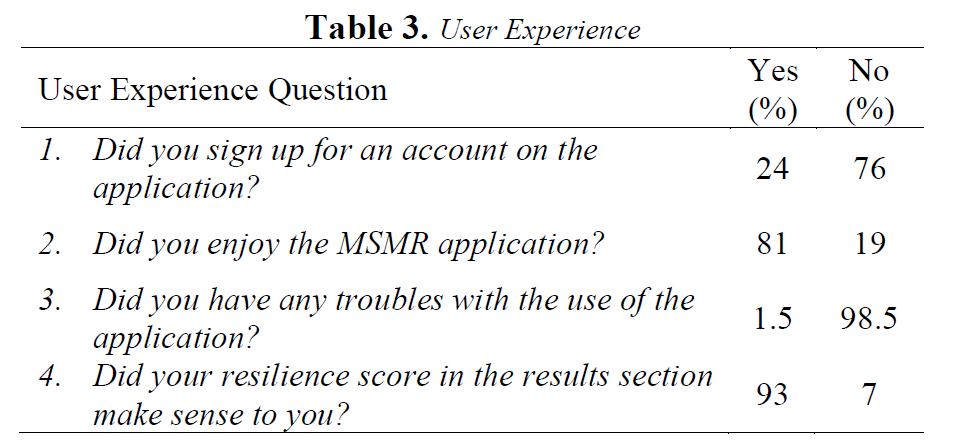

Frequency data were generated to track the digital application’s utilization. Participants using the digital application were asked whether they signed up for an individual account, whether they enjoyed the application, whether they had any trouble navigating the application, and whether their results made sense to them. Frequency data for each of the questions are summarized in Table 3.

Qualitative Themes Regarding App Usability

Finally, we asked participants to provide open feedback regarding their use of the digital application. A total of 35 responses were collected. Each response was examined and coded line-by-line in thematic analyses through NVivo. We coded responses based on four general categories: likes (e.g., “It was very helpful in the sense that there was a lot of information accessible to the user and it was very conveniently placed”), dislikes (“It is too general.”), suggested improvements (e.g., “I think it would have been better if an explanation on how the questions were chosen were included, I’d like to know how these questions are reliable in measuring our ability to cope with everyday challenges.”), and others (e.g., “How to use the app”).

We observed clusters of similar commentaries within the positive feedback expressing liking for the application. We then grouped similar feedback based on emerging themes, including general liking of the content (e.g., “It’s helpful since it makes you self-reflect for 30 minutes of your day and make you reassess what’s important in your life. Something I definitely don’t do enough.”) and positive feedback regarding the utility and functionality of the digital application (e.g., “Easy to read and navigate.”; “exploring more on internal and external resilience, coping pursuit, and finding ways to maximize and strengthen these areas if someone scored a low score in these areas.”).

General liking

Of the feedback received, approximately one-third included commentary on how much participants liked the digital application. Unlike a pen-and-paper tool, the app allowed for immediate access to scores, comparisons to norms, and further information surrounding resilience. For many users, the ease of accessing useful information in digital format is a main attraction. Past research has found that the accessibility of health-related information draws engagement and motivates changes in perceptions, beliefs, and attitudes towards health-related behaviours (Crookston et al., 2017; Peng et al., 2016). Furthermore, liking and overall satisfaction with an app is an important determinant of the adaptability and usability of a mobile application (Liew et al., 2019). Indeed, feedback from the current study reflected that the app content helped explain resilience, helped users understand themselves better, and led to self-reflection and reassessment of the sources of resilience in their lives.

Utility and functionality

Another theme identified through participant feedback is the usability and utility of a digital mobile application on resilience. Past research has highlighted that difficulty with navigation and a lack of comfort with applications can serve as barriers to accessing mobile applications (Bol et al., 2018; Peng et al., 2016). Indeed, the overall user experience has been proposed as an important framework for the consideration of adaptation for mobile health technologies (García-Gómez et al., 2014). Participant feedback converged to reflect positive functionality of the MSMR mobile application. Participants noted the ease of navigation, the straightforward use of the application, and the information presented through the mobile application.

Changes in the availability and utilization of digital technologies have created opportunities in research using digital mobile applications. In response to the lack of valid tools and measures incorporated into mobile applications (Harrison & Goozee, 2014), and the lack of evidence comparing the reliability across modalities, we sought to determine whether a psychometric inventory of resilience yielded comparable results between a mobile application and its standard format counterpart, and explored general attitudes associated with the use of the MSMR resilience mobile application. Outcomes of the current study suggest comparable results, with additional benefits that can incentivize users and participants.

Comparability and Reliability of Data Collected via Mobile Application

Scores obtained from student samples who completed the standard version and the digital application version of the MSMR-I did not differ significantly in their overall scoring. Additional subscale comparisons between mobile and standard modalities did not differ across genders, with the exception of the external resilience subscale for females. Despite the comparability of the overall scores, the subtle differences observed in the external resilience subscale for females suggest several possibilities. First, there may be differences in how individuals relate to questions across the two modalities. For example, past research noted that while overall scores did not differ across digital survey and pen-and-paper modalities, subtle changes may be present, especially if questions pertain to the self in relation to social elements of identity (Holländare et al., 2010; McCoy et al., 2004). For external subscale questions, greater heterogeneity may exist both between and within individuals, as perceived social circumstances and identity may be subject to natural fluctuations, even over a short duration. Indeed, the external resilience subscale of the MSMR-I had the lowest test-retest reliability compared to the other subscales. Due to concerns about exposure to both the standard and mobile formats of the tool, we utilized a cross-sectional design to compare scores between the two modalities. However, future research utilizing a repeated-measures design with counterbalancing may help explain the subtle differences observed in the external resilience subscale among female student participants.

Individual resilience scores of the MSMR-I completed on mobile app over a two-week period suggest that the test-retest reliability of the inventory is high for both the overall measure and across subscales. Indeed, the reliability statistics via the MSMR digital application were comparable to the test-retest reliability reported for other resilience measures over a similar two-week period (Prince-Embury, Saklofske, & Vesely, 2015). Taken together, findings from the current study suggest MSMR-I can reliably be adapted and used in a mobile application for various research-related purposes, with comparable qualities. Results further support previous research exploring the comparability of different modalities of assessment deliveries, particularly between online survey platforms and pen-and-paper formats (Joubert et al., 2009; Ward et al., 2014).

Considerations of Digital Mobile Application in Research

While researchers have stressed the importance of prior investigation before adapting any task or tool (Noyes & Garland, 2008), our study supports that tools can be adapted to digital formats and that such adaptations carry both advantages and considerations for researchers and users. Overall, attitudes regarding the use of a mobile application for resilience appear largely positive. In the sampled population, over 80% of respondents indicated they enjoyed the application. In addition, among those who provided open-ended feedback, a large majority discussed what they liked about the application, which included its content, usability, and functionality in a digital format. These findings are in line with previous research demonstrating a preference to engage across digital interfaces, such as digital magazines (Luna-Nevarez & McGovern, 2018).

There are clear advantages to utilizing mobile applications in research. Researchers can control the application environment, such as its configuration and the information made accessible to users, and can facilitate remote research and data collection. These features provide additional value to researchers above and beyond that offered by online survey platforms. Participants may experience additional benefits, such as obtaining personalized information in an engaging virtual environment. Participants can better engage with the content and/or task, thereby increasing the immersion and value to the individual user.

Despite the positive regard for the app, only a small percentage of participants (24%) created an account on the digital application. This may be due in part to concerns regarding the privacy of individual data, which should be considered in research designs. Although the perceived anonymity of digitized applications may lead users to be less affected by social convention, this may affect the context of use of the tool; that is, users may respond in unexpected ways that are driven by the private nature of responses in an app. Indeed, anonymity and other contextual elements that may create biases in perceptions of the tool are important considerations for researchers when adapting and utilizing digital mobile applications in research (McCoy et al., 2004). Thus, it is important that researchers and knowledgeable users consider these potential outcomes when adapting measures for various purposes.

The integration of novel innovations in digital technologies for research may be more relevant for select groups, such as those with strong preference for digital communications, population groups that are not easily accessible, and those that benefit from relevant information shared through digital mediums (Bender, Choi, Arai, Paul, Gonzalez, & Fukuoka, 2014; Gallagher et al., 2017). Emphasis should be placed on the appropriateness of the mobile application in consideration of age and other related demographic of the intended audience.

Given these considerations, future development and digitalization of validated tools should carefully balance data collection needs with purposeful integration of mobile technologies in research and practice. The integration of mobile applications holds many promises for research and practice. In healthcare settings, professionals may seek to integrate self-report tools and inventories to monitor their clients’ development and progress in-between scheduled sessions. In structured settings, such as a corporation and/or community organization, the utilization of validated measures through digital applications may ease the onboarding process for employees and/or personnel in facilitating tailored support to match their ongoing needs. Finally, for the individual user, the opportunity to access reliable and validated tools may provide important insights at their own convenience, thereby increasing access to important knowledge.

The digital revolution has expanded our capacity to engage and interface with existing tools and everyday devices for research purposes. With innovations in smartphone and wearable technologies, advancements have enabled novel modes of data collection through biophysiological indexes, facial recognition software, and repeated sampling of data points (Gan & Goh, 2016; Miller, 2012). Findings from the current study suggest that mobile applications can be comparable to their pen-and-paper/conventional online survey formats, with some added advantages of engagement in the mobile application. To our knowledge, the current study is the first to examine the comparability of standard formats of self-reported measures with adaptations in mobile applications. Results of the current study support the integration of empirically validated measures into health-related mobile applications and suggest that these applications are well-liked by participants.

This work was supported by the Royal Bank of Canada’s Partnership for Change: Immigration, Diversity, and Inclusion Project Award.

The authors declare no competing interests.

JJW Liu conceptualized the study, collected data, analysed data, and contributed to the preparation of the manuscript. J Gervasio assisted with data collection, analysis, and preparation of the manuscript. M Reed supervised the study, helped conceptualize the study ideas, and contributed to the data analyses and final manuscript draft.

Aguilera, A., & Muench, F. (2012). There’s an app for that: information technology applications for cognitive behavioral practitioners. Behavior Therapy, 35, 65-73. Retrieved from https://www.ncbi.nlm.nih.gov/pubmed/25530659.

Bender, M. S., Choi, J., Arai, S., Paul, S. M., Gonzalez, P., & Fukuoka, Y. (2014). Digital technology ownership, usage, and factors predicting downloading health apps among caucasian, filipino, korean, and latino americans: the digital link to health survey. JMIR Mhealth Uhealth, 2, e43. doi:10.2196/mhealth.3710.

Bol, N., Helberger, N., & Weert, J. C. M. (2018). Differences in mobile health app use: A source of new digital inequalities. The Information Society, 34, 183-193. doi:10.1080/01972243.2018.1438550.

Crookston, B. T., West, J. H., Hall, P. C., Dahle, K. M., Heaton, T. L., Beck, R. N., & Muralidharan, C. (2017). Mental and Emotional Self-Help Technology Apps: Cross-Sectional Study of Theory, Technology, and Mental Health Behaviors. JMIR Mental Health, 4, e45. doi:10.2196/mental.7262.

Gallagher, R., Roach, K., Sadler, L., Glinatsis, H., Belshaw, J., Kirkness, A., . . . Neubeck, L. (2017). Mobile Technology Use Across Age Groups in Patients Eligible for Cardiac Rehabilitation: Survey Study. JMIR Mhealth Uhealth, 5, e161. doi:10.2196/mhealth.8352.

Gan, S. K., & Goh, B. Y. (2016). Editorial: A dearth of apps for psychology: the mind, the phone, and the battery. Scientific Phone Apps and Mobile Devices, 2. doi:10.1186/s41070-016-0005-6.

García-Gómez, J. M., de la Torre-Díez, I., Vicente, J., Robles, M., López-Coronado, M., & Rodrigues, J. J. (2014). Analysis of mobile health applications for a broad spectrum of consumers: A user experience approach. Health Informatics Journal, 20, 74-84. doi:10.1177/1460458213479598.

Harrison, A. M., & Goozee, R. (2014). Psych-related iPhone apps. Journal of Mental Health, 23, 48-50. doi:10.3109/09638237.2013.869575.

Holländare, F., Andersson, G., & Engström, I. (2010). A comparison of psychometric properties between internet and paper versions of two depression instruments (BDI-II and MADRS-S) administered to clinic patients. Journal of Medical Internet Research, 12, e49. doi:10.2196/jmir.1392.

Joubert, T., & Kriek, H. J. (2009). Psychometric comparison of paper-and-pencil and online personality assessments in a selection setting. SA Journal of Industrial Psychology, 35, 78-88. Retrieved November 25, 2019, from http://www.scielo.org.za/scielo.php?script=sci_arttext&pid=S2071-07632009000100009&lng=en&tlng=en.

Liew, M. S., Zhang, J., See, J., & Ong, Y. L. (2019). Usability Challenges for Health and Wellness Mobile Apps: Mixed-Methods Study Among mHealth Experts and Consumers. JMIR Mhealth Uhealth, 7, e12160. doi:10.2196/12160.

Liu, J. J. W., Reed, M., & Girard, T. A. (2017). Advancing resilience: An integrative, multi-system model of resilience. Personality and Individual Differences, 111, 111-118. doi:10.1016/j.paid.2017.02.007.

Luna-Nevarez, C., & McGovern, E. (2018). On the Use of Mobile Apps in Education: The Impact of Digital Magazines on Student Learning. Journal of Educational Technology Systems, 47, 17-31. doi:10.1177/0047239518778514.

Marszalek, J. M., Barber, C., Kohlhart, J., & Holmes, C. B. (2011). Sample size in psychological research over the past 30 years. Perceptual and Motor Skills, 112, 331-348. doi:10.2466/03.11.PMS.112.2.331-348.

McCoy, S., Marks, P. V., Carr, C. L., & Mbarika, V. (2004). Electronic versus paper surveys: analysis of potential psychometric biases. Proceedings from 37th Annual Hawaii International Conference on System Sciences.

Miller, G. (2012). The Smartphone Psychology Manifesto. Perspectives on Psychological Science, 7, 221-237. doi:10.1177/1745691612441215.

Multi-System Resilience. (2019). Researching resilience. Retrieved from https://www.msmrtool.com/research/

Noyes, J. M., & Garland, K. J. (2008). Computer- vs. paper-based tasks: are they equivalent? Ergonomics, 51, 1352-1375. doi:10.1080/00140130802170387.

Olu, O., Muneene, D., Bataringaya, J. E., Nahimana, M. R., Ba, H., Turgeon, Y., . . . Dovlo, D. (2019). How Can Digital Health Technologies Contribute to Sustainable Attainment of Universal Health Coverage in Africa? A Perspective. Frontiers in Public Health, 7, 341. doi:10.3389/fpubh.2019.00341.

Parks, A. C., Williams, A. L., Tugade, M. M., Hokes, K. E., Honomichl, R. D., & Zilca, R. D. (2018). Testing a scalable web and smartphone based intervention to improve depression, anxiety, and resilience: A randomized controlled trial. International Journal of Wellbeing, 8, 22-67. doi:10.5502/ijw.v8i2.745.

Peng, W., Kanthawala, S., Yuan, S., & Hussain, S. A. (2016). A qualitative study of user perceptions of mobile health apps. BMC Public Health, 16, 1158. doi:10.1186/s12889-016-3808-0.

Prince-Embury, S., Saklofske, D. H., & Vesely, A. K. (2015). Measures of Resiliency. In Measures of Personality and Social Psychological Constructs (pp. 290-321). Elsevier. doi:10.1016/b978-0-12-386915-9.00011-5

Sassenberg, K., & Ditrich, L. (2019). Research in Social Psychology Changed Between 2011 and 2016: Larger Sample Sizes, More Self-Report Measures, and More Online Studies. Advances in Methods and Practices in Psychological Science, 2, 107-114. doi:10.1177/2515245919838781.

Ward, P., Clark, T., Zabriskie, R., & Morris, T. (2014). Paper/Pencil Versus Online Data Collection. Journal of Leisure Research, 46, 84-105. doi:10.1080/00222216.2014.11950314.